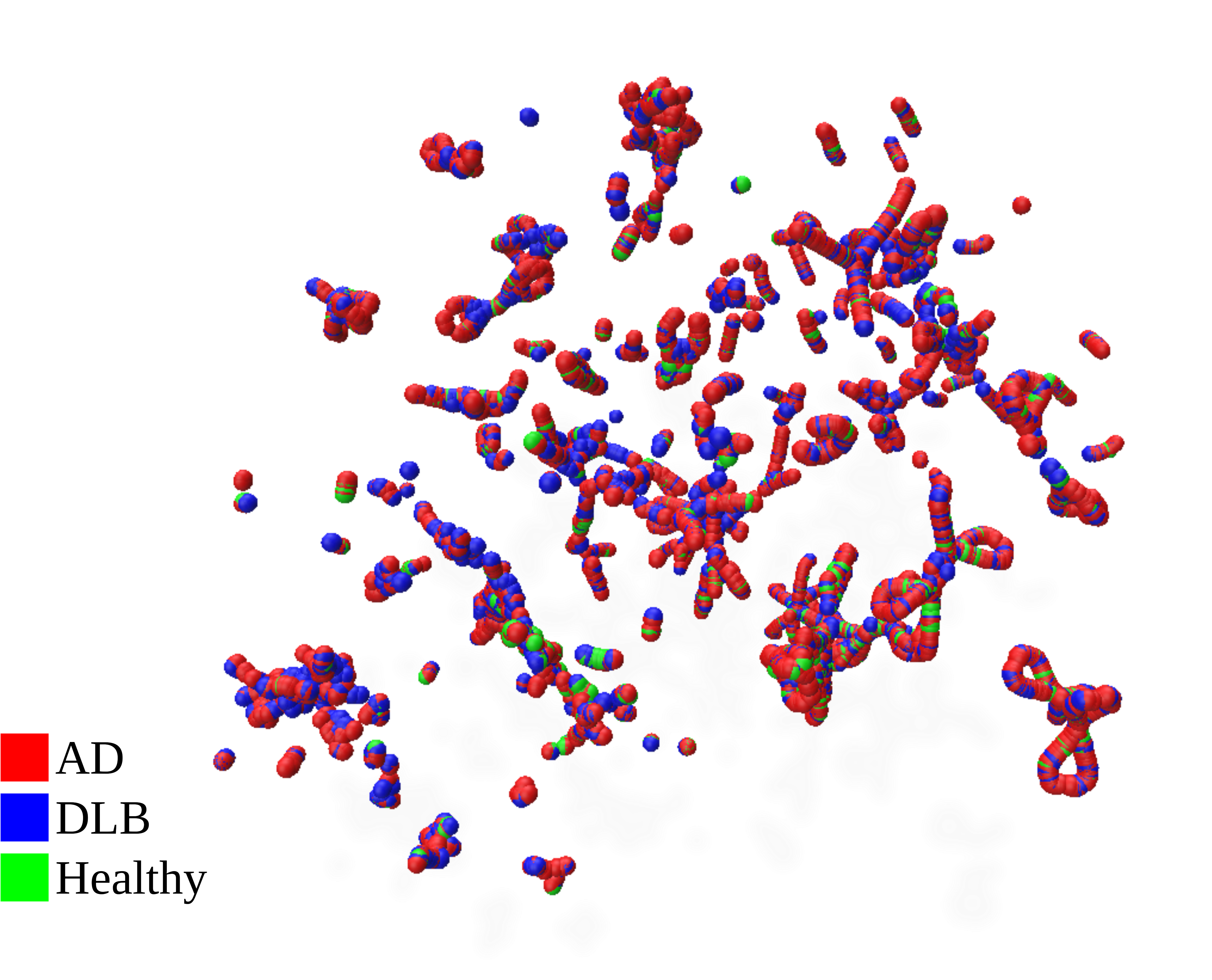

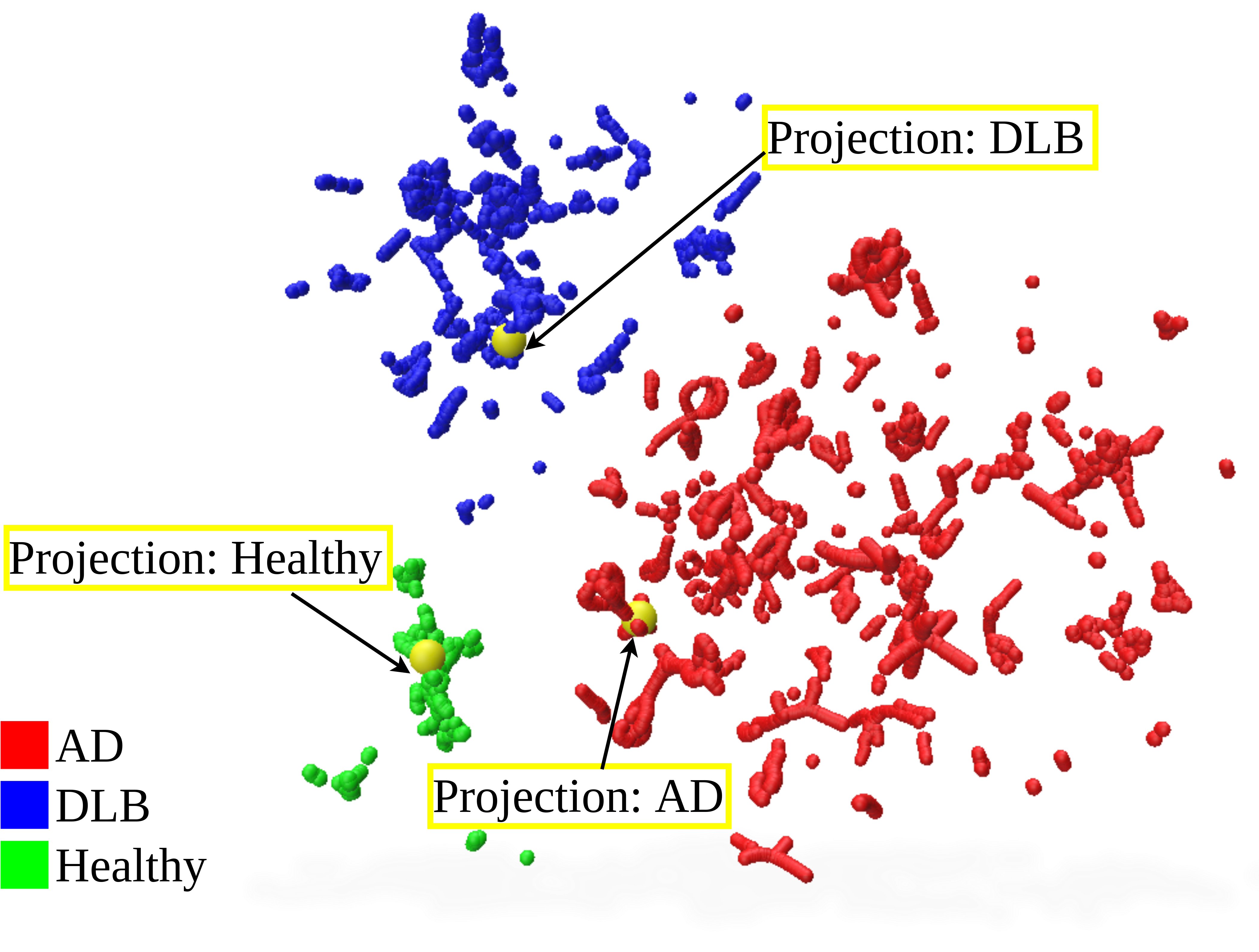

We provide interactive webpages that visualize the Numerical Text Embeddings derived from gait parameters. The embeddings are projected into three dimensions with UMAP (3 components), and the projections of the learned per-class text features are highlighted in yellow.

Enhancing Gait Video Analysis in Neurodegenerative Diseases by Knowledge Augmentation in Vision Language Model